Pierced by the Public Interest: How Two Lawyers Did What a Whole Legislature Couldn’t

A Win Sharp Enough to Believe In

It was the kind of win that makes a person believe in the legal system again—if only for a moment.

In a courtroom in California, Glen Summers and Karma Giulianelli of Bartlit Beck didn’t just take on Google. They ran it through, legally speaking. Their $314 million jury verdict was more than a headline—it was a historic ruling that declared, for the first time, that your cellular data is your property under California law. A tech giant was held accountable for the unauthorized consumption of user cellular data.

Yes, you read that correctly: your mobile data, that invisible trail of breadcrumbs Google hoovers up whether you’re checking email or just checking the weather, now has legal weight in California. And thanks to Glen and Karma, it also has a price tag.

Meanwhile, in Colorado, where legislators recently passed SB 24-205, a supposedly landmark AI accountability bill, the enforcement mechanism is... well, coming soon. Like your delayed Uber. Or a corporate apology.

Meet the Dragon Slayers

Summers and Giulianelli didn’t just file a lawsuit. They waged a digital crusade.

Their case set a critical precedent under California’s consumer protection laws, establishing that unauthorized data use can constitute property theft. They laid out exactly how Google’s Android system was designed to siphon off user data without permission, even when phones weren’t on Wi-Fi.

Their trial strategy was clear: user data is not abstract. It is consumed, monetized, and stolen. And a jury agreed.

Their lances? Legal precedent.

Their armor? California law.

Their impact? Historic.

And perhaps most importantly, they proved something bigger: that Big Tech doesn’t just fear Congress or regulators—it can be brought to heel by two lawyers with a sword and a strategy.

Meanwhile, Back in the Rockies…

Now, let’s talk about Colorado—a state that flirted with AI regulation greatness, then ghosted its most vulnerable residents.

SB 24-205 put strong language on the books: high-risk AI systems impacting education, housing, and employment would finally be held accountable. But implementation stalled. Governor Polis, ever the innovation-friendly libertarian, reminded us that ambition only goes as far as donor comfort and budget votes allow. The law won’t be enforced until February 2026, and even then, lawmakers refused to fund the Department of Law to actually do the job.

Attempts to fix that? Killed in committee.

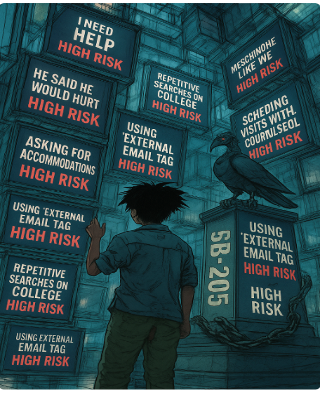

Attempts to apply the law to school surveillance systems that flag, sort, and profile students—especially those with disabilities or behavioral plans? Quietly buried.

Yet there’s still a path forward: the Attorney General already has the power to classify school surveillance tools as “high-risk AI” under the existing statute. These systems influence placement, discipline, and mental health escalation—and for many students, especially those already tracked by IEPs or flagged for support, that influence is far from hypothetical. It’s measurable. It’s lived. And it’s unregulated.

California Regulates, Colorado Sidesteps

Let’s be clear: California didn’t just pass laws; it enforced them. Its courts recognized data as property, its juries ruled on harm, and its plaintiffs walked away with a legally sanctioned sword hanging over Google’s increasingly shaky halo.

Meanwhile, in Colorado, we have a beautiful bill and a governor who wants AI regulation to succeed… just not in any way that disturbs tech donors or existing contracts.

As CPR News reported, state lawmakers in 2025 rejected attempts to accelerate the implementation of SB 24-205. Why? Because business groups claimed it would “chill innovation.”

Right. And holding arsonists accountable might “chill warmth.”

Why This Matters

This isn’t about dunking on Colorado—okay, it is, a little—but more importantly, it’s about recognizing the legal and strategic difference between passing a bill and executing it.

• California now has a jury verdict confirming digital data can be treated as stolen property.

• Colorado has a good policy with no enforcement mechanism until it’s politically safe.

And in the meantime, while Glen and Karma ride off victorious, kids across Colorado are still being flagged, profiled, and archived by AI systems their parents never signed off on—and their school boards barely understand.

Final Thoughts: When Law Is Actually a Sword

Summers and Giulianelli didn’t just litigate. They modeled what state-level consumer protection should look like in the age of algorithmic extraction.

So while California courts are issuing digital justice with the precision of a thousand-page brief, Colorado is still stuck debating whether the Grim Reaper’s scythe violates free enterprise.

Colorado passed a landmark AI bill—then decided not to fund its future.

California? It built a battering ram and handed it to two lawyers with enough backbone to swing it.

Because sometimes, the law doesn’t wear a robe.

Sometimes, it wears chainmail and carries a sword.